Learn American Sign Language using Mixed Reality (Hololens)? Yes, we can!

I came up with the idea of teaching American Sign Language using Mixed Reality while listening to Raja Kushalnagar of Gallaudet University at one of our weekly Brown Bag Lunches at HCIL. Raja gave his presentation in ASL with the help of a translator speaking aloud in English. I realized that if Raja were wearing an MR headset with advanced hand gesture recognition capability, it could recognize ASL, convert it into English and speak it aloud. This would allow Raja to talk to virtually anyone without needing a translator. I also realized that mixed reality could be used to teach ASL to people.

I started my research by investigating how ASL is currently being taught.

There are multiple phases to learning ASL:

Phase 1: Learn the alphabet

Phase 2: Learn common words

Phase 3: Learn grammar

Phase 4: Sign with other people

Why do people teach ASL using English?

It took me a while to ask this question. I had assumed that children who are deaf or hard of hearing learn ASL the way hearing children learn English. But that is not the case: 9 out of 10 deaf or hard of hearing children are born to hearing parents who don’t know ASL. This means the child cannot learn it just by observing their parents, so the parents learn ASL along with their child. This is why there are so many online resources for teaching ASL to people who already know a spoken language. What about the 1 child in 10 who is born to deaf or hard of hearing parents who know ASL? They pick up ASL by observing their parents, just the way a hearing child learns English by listening to theirs.

It is interesting to note that hard of hearing or deaf children babble with their fingers too, before becoming proficient at ASL, just like hearing children do.

How can Mixed Reality (Hololens) improve instruction for ASL?

Concept 1

The first interaction concept I explored was to make one hand gesture appear in front of the user, who would then use the air tap gesture on Hololens to cycle through all the gestures. But this technique had a few problems:

1. The air tap gesture on Hololens was difficult to master and didn’t always work

2. Students want to compare two different gestures and the nuances between them. It would be difficult to compare two ASL gestures by cycling between them.

Concept 2

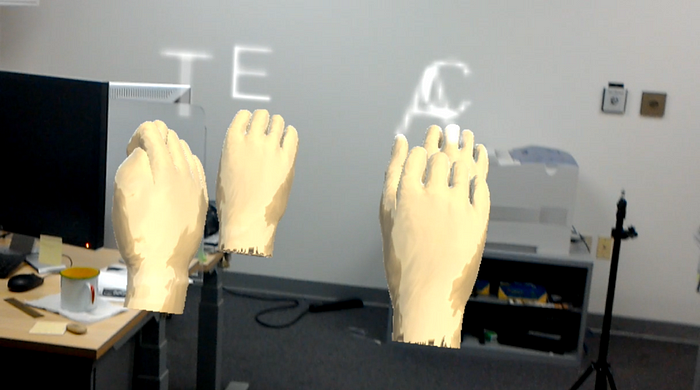

Another interaction technique I investigated was to show all the ASL signs at once, distributed in space. The benefits of this approach are:

1. The user can compare different hand gestures by just looking at the two gestures at once

2. Since the user is moving around the space, they build spatial memory in addition to the muscle memory developed by mimicking the hand gestures floating in space. This spatial memory will help them associate different gestures with different letters of the English alphabet.

3. The user can move around and look at gestures from different points of view, which is not possible when another person is demonstrating the gesture.

Prototyping

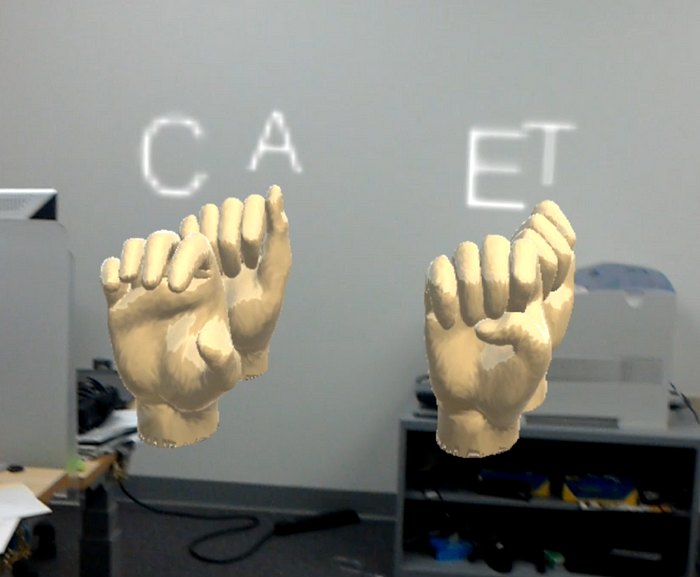

I used Hololens and the Unity engine to create a prototype for this app. I searched for 3D models of hands making ASL signs, imported them into Unity and positioned them at chest level, where the user would be able to see them and interact with them.
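To make the "distributed in space, at chest level" idea concrete, here is a minimal Python sketch of one possible layout. It is not the prototype's Unity code. The arc shape, the radius, the chest height and the function name `layout_signs` are all assumptions made for illustration, as is the coordinate convention (user at the origin, y up, z forward, meters).

```python
import math
import string


def layout_signs(radius=1.5, chest_height=1.3, arc_degrees=180.0):
    """Return {letter: (x, y, z)} with the 26 signs spread evenly on an arc.

    Assumed convention (hypothetical, not from the prototype):
    user at the origin, y is up, z is straight ahead, units are meters.
    """
    letters = string.ascii_uppercase
    arc = math.radians(arc_degrees)
    step = arc / (len(letters) - 1)  # angular gap between neighbouring signs
    start = -arc / 2                 # leftmost sign
    positions = {}
    for i, letter in enumerate(letters):
        angle = start + i * step     # 0 means straight ahead of the user
        x = radius * math.sin(angle)
        z = radius * math.cos(angle)
        positions[letter] = (x, chest_height, z)
    return positions


if __name__ == "__main__":
    for letter, pos in layout_signs().items():
        print(letter, tuple(round(c, 2) for c in pos))
```

With these assumed defaults, "A" ends up directly to the user's left and "Z" directly to the right, with every sign the same distance away, so any two neighbouring signs can be compared by turning the head slightly.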

I tried two different default views: first person and third person. In the first person view, the hands face away from you, which would be the case if you were performing the gesture yourself. In the third person view, the hands face towards you, which would be the case if someone were showing you how to perform a particular gesture. I found the third person view to be more useful, as all the details of the fingers are visible to the user. The user can walk around to the other side in the first person view as well, but that is one extra step, resulting in greater friction.
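The difference between the two default views comes down to a 180 degree turn of each hand model. The sketch below is only illustrative and is not taken from the prototype. It assumes the user stands at the origin, that a yaw of 0 degrees points along +z with positive yaw turning towards +x, and that the model's forward axis is the side of the hand an observer sees. The function name `facing_yaw` and the view labels are hypothetical.

```python
import math


def facing_yaw(position, view="third"):
    """Yaw in degrees, in [0, 360), for a hand model placed at `position`.

    Assumptions (hypothetical): user at the origin, y is up, yaw 0 points
    along +z, positive yaw turns towards +x.
    view="third": the hand faces the user, as if someone else were signing.
    view="first": the hand faces away, as if the user were signing.
    """
    x, _, z = position
    if view == "third":
        dx, dz = -x, -z   # direction from the hand back to the user
    elif view == "first":
        dx, dz = x, z     # direction from the user out past the hand
    else:
        raise ValueError("view must be 'first' or 'third'")
    return math.degrees(math.atan2(dx, dz)) % 360.0


if __name__ == "__main__":
    hand = (0.0, 1.3, 1.5)  # straight ahead of the user at chest height
    print(facing_yaw(hand, "third"), facing_yaw(hand, "first"))
```

For any position, the two views differ by exactly half a turn, which is why switching the default is cheap in software but costs the user a walk around the model.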

Conclusion

Mixed Reality is a medium worth exploring for teaching skills based on kinesthetic learning. Although Hololens does have a small field of view, it can still be used as an effective teaching tool for American Sign Language. The number of people using ASL to communicate is upwards of 500,000 in the United States and Canada. Mixed reality can have a significant impact on the lives of hard of hearing and deaf children and their families.

Next Steps

This project was a proof of concept to test whether mixed reality can be an effective tool for teaching the American Sign Language alphabet. Mixed reality can be an effective medium for teaching motor skills. This project does not investigate the interaction techniques that will be needed to teach people to sign words, grammar and other gestures that make use of both hand movements and facial expressions. That will need a lot of 3D asset creation for the animations. Capturing the facial expressions and full body movements of people signing ASL is possible, but it will take a lot of resources and technological investment.

Another idea that I will be exploring is using the cameras on a mixed reality headset to recognize ASL gestures signed by the wearer and convert them into speech. A related idea worth exploring is to record speech from the people the user is talking to and convert it into text and into ASL gestures performed by a virtual avatar floating in front of the user. This could greatly increase the number of people that a hard of hearing or deaf person can communicate with. These ideas, however, need significant advances in camera technology, speech-to-text and fast real-time rendering on mixed reality devices.

Feel free to reach out to me if you have any questions or want to work together. (Hint: Hire me as a VR UX/Developer :)